Getting Insights from Jira With LLMs Part 1: Architecture

Feb 2, 2024

For better or for worse, Jira is the tool that many technical organizations rely on to organize their projects. It's a great tool, no doubt, but getting visibility on projects that don't fit neatly into Jira's way of doing things can get messy. Even seasoned Jira jockeys can find it difficult to quickly get insights, and may end up building a bunch of tricks and hacks that help them do their job better. What's even more painful is manually writing reports for leadership about project status as it's reflected in Jira. Here's how we built an MVP that tackled these pain points by combining some analysis with some LLM magic.

Recap of the problem

You may already know about Tangential and the problem we were trying to address, but I’ll recap it here. Through our user interviews with TPMs (technical program managers), we learned that getting a good picture of a project as it exists in Jira, understanding what threatens to delay delivery, and reporting that status back to executives and other stakeholders are all very manual tasks.

Apart from meetings that TPMs held to gather status and understand where the risks of a project were, they relied on Jira to get insights on what epics were at risk of not being completed, and what that would mean for the overall health of their programs.

Many TPMs we talked to had all sorts of hacks that helped ease this pain. They wrote scripts that dumped Jira data into a Confluence page, or exported data to a CSV that was then loaded into a spreadsheet; one company even loaded their entire Jira database into Snowflake and built dashboards on that data. We got strong signals that they wanted better visibility into their projects and an easier way to report on issues.

From the information we gathered through our user interviews, we decided to build an MVP that addressed these problems.

Features for our MVP

We wanted to address the following pain points:

- Visibility across multiple projects or programs can be difficult and time-consuming to achieve

- Writing reports that are given to executives about a program’s status is tedious, especially when different reports need to be written for different audiences

We needed to limit the scope of our MVP to only the features that would test if we could address the pain points effectively. The initial features that we would build were:

- A way to define a project or program by entering a JQL (Jira Query Language) query and a name

- Ability to analyze a project by critical metrics

- Project analysis dashboard

- Report generation that could write a text-based report for different audiences (VP of engineering, CPO, etc.)

- Ability to edit and share the text report

We believed these features would address the pain points by automating away a lot of the tedious parts of a TPM's day-to-day work.

Crunching numbers

To analyze a project, we had to compute some numbers that would help understand what the current status was and give clues to potential problems that could cause it to be delayed. We decided to focus on:

- Total epic story points

- Remaining epic story points

- Current velocity of the project

- Time in status (how long a Jira ticket or epic has been in a certain state)

- Scope changes (has the number of story points increased, when, how much, and by whom)

- Changes to the epic and to its child issues (was the assignee changed, changes to description, etc)

- Comments before and after a pivot date

These calculations would be performed in code, from data fetched from the Jira API.
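
To make a few of these metrics concrete, here is a minimal TypeScript sketch. The `Issue` and `StatusChange` shapes are hypothetical simplifications and not the Jira API's actual response format, and "velocity" is assumed here to mean story points resolved per week over a trailing window, which may differ from the definition used in the real analyzer.

```typescript
// Hypothetical, simplified shapes; real Jira API responses are much richer.
interface StatusChange {
  status: string;
  enteredAt: Date; // when the issue moved into this status
}

interface Issue {
  key: string;
  storyPoints: number;
  done: boolean;
  resolvedAt?: Date;
  statusHistory: StatusChange[]; // ordered oldest to newest
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Total and remaining story points across an epic's child issues.
function pointTotals(issues: Issue[]): { total: number; remaining: number } {
  let total = 0;
  let remaining = 0;
  for (const issue of issues) {
    total += issue.storyPoints;
    if (!issue.done) remaining += issue.storyPoints;
  }
  return { total, remaining };
}

// Days an issue has spent in its current status as of `now`.
function daysInCurrentStatus(issue: Issue, now: Date): number {
  const last = issue.statusHistory[issue.statusHistory.length - 1];
  if (!last) return 0;
  return (now.getTime() - last.enteredAt.getTime()) / MS_PER_DAY;
}

// Assumed definition: story points resolved per week over a trailing window.
function velocityPerWeek(issues: Issue[], now: Date, windowDays = 28): number {
  const windowStart = now.getTime() - windowDays * MS_PER_DAY;
  const completed = issues
    .filter(
      (i) =>
        i.done &&
        i.resolvedAt !== undefined &&
        i.resolvedAt.getTime() >= windowStart &&
        i.resolvedAt.getTime() <= now.getTime()
    )
    .reduce((sum, i) => sum + i.storyPoints, 0);
  return completed / (windowDays / 7);
}
```

Keeping these as pure functions over plain data means they can be tested against saved API responses without hitting Jira at all.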

How it needed to work

I started sketching out how these features would need to be built in order to work and handle at least medium-sized Jira projects. I thought this was how project analysis could work:

- User authenticates with Atlassian, providing us with a token for read access

- Web interface shown where users can define their projects via JQL query

- Project analysis is triggered by the web UI

- The backend starts by pulling in every epic from the JQL query via the Jira API and enqueuing the epic to be processed

- Using API data, each epic is analyzed in depth and a summary is written for each epic with an LLM

- The analysis is added to the database

- Once every epic is analyzed, a summary of all epics is made for the project
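
The "pull in every epic and enqueue it" step above might look roughly like the sketch below. Both `searchPage` and `enqueue` are hypothetical injected functions standing in for a paginated Jira search call and a queue client; the `SearchPage` shape is an assumption made for illustration, not the Jira API's response format.

```typescript
// Hypothetical shapes for illustration only.
interface EpicRef {
  key: string;
}

interface SearchPage {
  epics: EpicRef[];
  total: number; // total number of matches for the JQL query
}

// Stand-ins for the Jira search call and the queue client (both assumed).
type SearchFn = (jql: string, startAt: number, maxResults: number) => Promise<SearchPage>;
type EnqueueFn = (message: { projectId: string; epicKey: string }) => Promise<void>;

// Pages through the JQL results and enqueues one message per epic.
// Returns the number of epics enqueued.
async function enqueueEpics(
  projectId: string,
  jql: string,
  searchPage: SearchFn,
  enqueue: EnqueueFn,
  pageSize = 50
): Promise<number> {
  let startAt = 0;
  let enqueued = 0;
  while (true) {
    const page = await searchPage(jql, startAt, pageSize);
    for (const epic of page.epics) {
      await enqueue({ projectId, epicKey: epic.key });
      enqueued++;
    }
    startAt += page.epics.length;
    // Stop on an empty page too, so a changing result set cannot loop forever.
    if (page.epics.length === 0 || startAt >= page.total) break;
  }
  return enqueued;
}
```

Passing the search and queue functions in as parameters keeps the paging logic independent of any particular HTTP or queue library.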

And on the report generation side:

- User selects a project and an audience (VP of engineering, VP of product, etc) that they want to update

- Key metrics are extracted from each epic in the project, along with the generated text summary

- LLM is prompted with this information, tailored to the selected audience based on a template

- Report is stored in the database and can be edited by the user
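
As an illustration of the audience-tailored prompt step, here is a minimal sketch of a template function. The `EpicSummary` shape, the audience list, and the template wording are all assumptions made for this example; the real templates are not shown in this post.

```typescript
// Hypothetical shapes and template wording, for illustration only.
interface EpicSummary {
  key: string;
  title: string;
  totalPoints: number;
  remainingPoints: number;
  summary: string; // LLM-generated text from the analysis step
}

type Audience = "VP of Engineering" | "VP of Product";

// Per-audience guidance appended to the base instructions.
const AUDIENCE_GUIDANCE: Record<Audience, string> = {
  "VP of Engineering": "Focus on delivery risk, velocity, and staffing.",
  "VP of Product": "Focus on scope changes and impact on the roadmap.",
};

// Builds a single prompt string from key metrics plus the stored summaries.
function buildReportPrompt(
  projectName: string,
  audience: Audience,
  epics: EpicSummary[]
): string {
  const epicLines = epics.map(
    (e) =>
      `- ${e.key} ${e.title}: ${e.remainingPoints} of ${e.totalPoints} story points remaining. ${e.summary}`
  );
  return [
    `Write a status report for the ${audience} on the project "${projectName}".`,
    AUDIENCE_GUIDANCE[audience],
    "Use only the epic data below:",
    ...epicLines,
  ].join("\n");
}
```

Because the prompt is built from stored metrics and summaries rather than raw Jira data, generating a second report for a different audience only needs a different guidance line, not a new analysis run.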

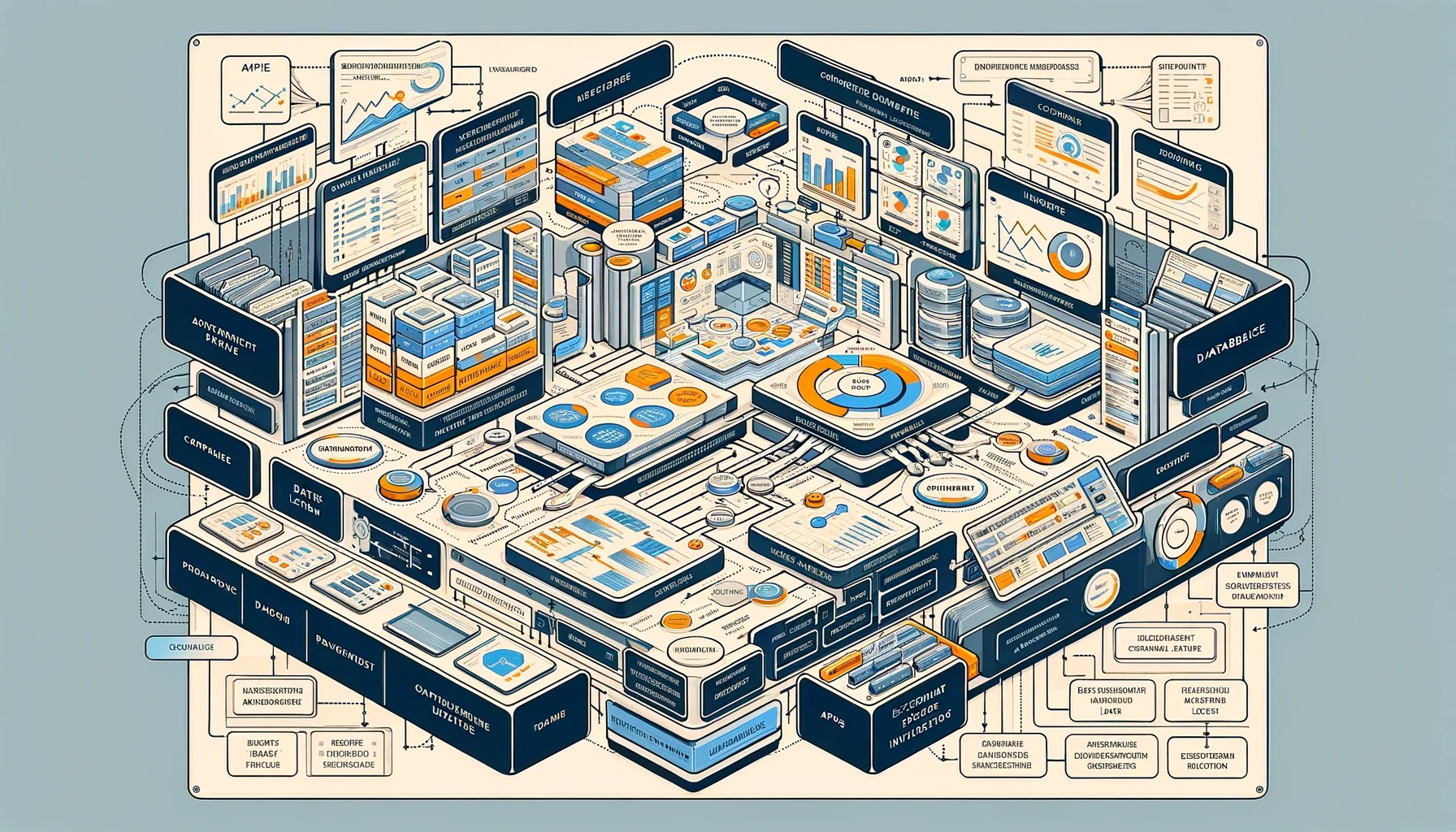

Architecture

To make this work for our MVP, I decided to build the stack like this:

Serverless TypeScript/Node.js deployed on AWS Lambda for number crunching and analysis

Serverless is a perfect fit for this use case for several reasons. I knew that the analysis of Jira data would undoubtedly take a long time and could be split into smaller chunks of work, which is where serverless can really shine. When analysis started, many Lambda functions could be spun up to handle the small chunks, hitting the Jira API, doing calculations, and writing to the database.

Serverless is also great because we didn’t need a quick response time. In this case, the analysis would be triggered by the UI and then done in the background, so it didn’t matter if there was a warmup time for the Lambda functions. Also, it’s cheap to run.

Building on AWS also naturally gives you access to all the great features needed to build a robust infrastructure, like SQS and API Gateway. When the time came to add heavy security on top of our product, that would be straightforward with AWS.
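
To show how the "small chunks" idea could map onto Lambda and SQS, here is a minimal sketch of a queue-driven handler, assuming one SQS message per epic. The `EpicJob` message shape and the `analyzeEpic` function are hypothetical, and the local `SqsEvent` types only mirror the few event fields used here. Returning `batchItemFailures` relies on Lambda's partial batch response feature, which has to be enabled on the event source mapping.

```typescript
// Minimal local types mirroring only the parts of the SQS event used here.
interface SqsRecord {
  messageId: string;
  body: string;
}

interface SqsEvent {
  Records: SqsRecord[];
}

interface BatchResponse {
  batchItemFailures: { itemIdentifier: string }[];
}

// Hypothetical message shape: one job per epic.
interface EpicJob {
  projectId: string;
  epicKey: string;
}

// Hypothetical worker: fetch the epic from Jira, compute metrics, write to the DB.
type AnalyzeEpic = (job: EpicJob) => Promise<void>;

// Wraps the worker in a handler that processes each message independently.
function makeHandler(analyzeEpic: AnalyzeEpic) {
  return async (event: SqsEvent): Promise<BatchResponse> => {
    const batchItemFailures: { itemIdentifier: string }[] = [];
    for (const record of event.Records) {
      try {
        const job = JSON.parse(record.body) as EpicJob;
        await analyzeEpic(job);
      } catch (err) {
        // Report only this message as failed so it can be retried on its own.
        batchItemFailures.push({ itemIdentifier: record.messageId });
      }
    }
    return { batchItemFailures };
  };
}
```

With this shape, one slow or failing epic does not force the rest of its batch to be reprocessed, and concurrency comes from Lambda scaling out across the queue.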

MongoDB

I chose MongoDB not only because it had a free hosted tier, but also because its schemaless model worked perfectly for this use case, where there was little need for complex queries or joins across data models. The Jira analysis could be quickly stored in JSON format (basically) for fast retrieval by a key.

They had just added vector search features when I started building this MVP, so I figured if that was something we needed in the future, we could dig in.
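
For a sense of what "stored in JSON format for fast retrieval by a key" could mean in practice, here is a sketch of one possible document shape. The field names and the composite `_id` scheme are assumptions made for this example, not the actual schema.

```typescript
// Hypothetical document shape; field names are illustrative.
interface EpicMetrics {
  totalPoints: number;
  remainingPoints: number;
  velocityPerWeek: number;
}

interface EpicAnalysisDoc {
  _id: string; // `${projectId}:${epicKey}`, so a lookup is a single key read
  projectId: string;
  epicKey: string;
  analyzedAt: string; // ISO 8601 timestamp
  metrics: EpicMetrics;
  summary: string; // LLM-written summary of the epic
}

// Builds the document that would be stored for one analyzed epic.
function toAnalysisDoc(
  projectId: string,
  epicKey: string,
  metrics: EpicMetrics,
  summary: string,
  analyzedAt: Date
): EpicAnalysisDoc {
  return {
    _id: `${projectId}:${epicKey}`,
    projectId,
    epicKey,
    analyzedAt: analyzedAt.toISOString(),
    metrics,
    summary,
  };
}

// With the official Node.js driver, a document like this could be upserted with
// collection.replaceOne({ _id: doc._id }, doc, { upsert: true }),
// so re-running an analysis overwrites the previous result for that epic.
```

Since each epic's analysis is self-contained, no joins are needed to render a project: a single query on `projectId` returns every document the dashboard needs.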

Next.js Web App/Frontend API

Now, I’m not really a web frontend dev. I had made some basic apps with React before, but that was a long time ago. Honestly, I was dreading making a web frontend because of how much of a hassle it was during my last experience. I figured (and hoped) that things had progressed a bit since I last had a crack at web frontends.

I hadn’t used Next.js, but after having a look around at what the leading React frameworks were, this was a good fit because of its ability to include APIs and SSR (server side rendering) on top. That, and the fact that it was insanely easy to deploy on Vercel, and I was sold. I'm really happy that the tech has improved so much since I last picked it up.

NextAuth.js

NextAuth supports Atlassian authentication right out of the box, so it was easy to get going with this framework and not fuss too much with getting and storing our Jira token.

Vercel for hosting

I was super impressed with how slick the whole Vercel experience was, and how easy it was to configure different environments and integrate everything into our workflows. This allowed us to get a prod and a dev environment set up super fast, just by specifying different branches and then configuring environment variables for each.

OpenAI GPT-4

This was an obvious choice, especially for prototyping and an MVP. We did briefly consider how we could fit other models into our product, but because it's so easy to get up and running with OpenAI, we went for it. If it became a problem later on, we would handle it then. I also already had quite a bit of experience with GPT-3/4, so that came in handy too.

Shared NPM package for DB access

Shared code makes things easier, though it can be a slight pain to set up.

GitHub Actions for backend CI/CD

CI/CD, while not critical for an MVP, really makes life easier. Generate a few GitHub Actions workflow files with ChatGPT that run tests and deploy automatically, and you're good to go.

Building it out

The first step was to build the functions that fetched and calculated the metrics that would tell us how well a project was going. To do due diligence on the Jira API and to validate that it would be feasible to crunch the numbers in the way we wanted, I first made a Python script prototype that could build an analysis for a desired JQL query.

You may wonder why I would make a prototype of the MVP in Python if we didn't plan to ship anything written in Python. Here are the reasons why I would 100% do this again.

- It was less upfront investment. Let's say I found out that Jira's API was missing critical features that we would need. It's hard to glean that just from the API docs, so finding it out fast by writing a cheap little script would be far better than finding it out much later.

- It made the work a lot faster, since I could just run the script in the command line with a hardcoded token and see the results immediately. I could then experiment with feeding the data to OpenAI to see how good the results were.

- The code wouldn't be thrown away. Thanks to ChatGPT, I could just throw the prototype script in and ask it to convert it to TypeScript, with almost no problems.

If you're interested in checking out the Jira analysis functions, you can find them here.

Ok, we can do this!

After doing a proper technical validation with the script, I moved ahead with building the foundation of the app. We ended up with a simple but solid architecture for the MVP.

The backend code is available on GitHub.

Stay tuned for:

- How to break down analysis into smaller tasks

- How I made the analyzer handle huge Jira projects

- How to prompt GPT-4 with enough information to get what you want without blowing up the prompt

Thanks for reading and see you soon!